Machine Learning (ML) is one of the many fields of study within artificial intelligence (AI) that is moving technology forward. Its main objective is to develop algorithms that learn from datasets in order to perform a wide variety of tasks without explicit instructions from a user.

ML benchmarking is an integral part of the development of these algorithms, and researchers in the Georgia Tech School of Electrical and Computer Engineering are at the forefront, making major benchmarking contributions in AI systems, hardware design, autonomous machines, and more.

What is Machine Learning Benchmarking?

Benchmarking assesses algorithms and programs, identifies bugs or problems, and facilitates comparisons between them. This is typically done on a unified virtual program that simulates the end product in a controlled environment.

Algorithms are evaluated on many different criteria, including speed, efficiency, performance, and energy consumption, to give developers unified data. Benchmarking also allows for the improvement and reinforcement of algorithms where there may be vulnerabilities, as well as the establishment of new standards for future research.
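As a rough illustration of what comparing algorithms on one criterion (speed) can look like, here is a minimal Python sketch. The `benchmark` helper and the two toy functions are hypothetical and are not taken from any of the research groups described in this article; real ML benchmarks also control for hardware, inputs, and warm-up effects.

```python
import statistics
import time


def benchmark(fn, *args, repeats=5):
    """Run fn(*args) several times and summarize wall-clock time in seconds."""
    timings = []
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args)
        timings.append(time.perf_counter() - start)
    return {
        "result": result,
        "best_s": min(timings),
        "median_s": statistics.median(timings),
    }


def sum_of_squares_loop(n):
    """Straightforward loop over 0..n-1."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_of_squares_formula(n):
    """Closed form for 0^2 + 1^2 + ... + (n-1)^2."""
    return (n - 1) * n * (2 * n - 1) // 6


if __name__ == "__main__":
    # Same input for both candidates so the timings are comparable.
    for candidate in (sum_of_squares_loop, sum_of_squares_formula):
        report = benchmark(candidate, 100_000)
        print(candidate.__name__, report["result"], f"{report['median_s']:.6f}s")
```

Running both candidates on the same input and checking that they return the same result is the small-scale version of the "unified data" idea: the comparison only means something if the task and the measurement are held fixed.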

Standardized Execution Traces

Backed by MLCommons, ECE Associate Professor Tushar Krishna is co-chairing the Chakra Working Group along with Srinivas Sridharan from Nvidia. The working group is focused on advancing performance benchmarking and co-design of AI systems by developing standardized execution traces, named Chakra.

An execution trace is the sequence of compute and communication operations executed when running AI code. Analyzing these traces can improve the optimization of AI models, systems, and software, as well as next-generation hardware.

Widely used large language models (LLMs) like ChatGPT rely on underlying AI workload systems to train and improve them. But it takes massive amounts of compute power and time to run these systems, which can make the training process impractically expensive and time-consuming.

Diagnosing the bottlenecks in this process is equally challenging.

Chakra execution traces will be an open, interoperable, graph-based representation of AI/ML workloads that standardizes how these important workloads are defined and shared, without the need to share an entire software stack.

The standardization will speed up the benchmarking process and enable easier data translation across a wide range of proprietary simulation and emulation tools, making things like the reproduction of bugs or performance regression on different platforms more efficient, ultimately leading to improved systems.

“Chakra provides the flexibility to concentrate on specific aspects of workload optimization in isolation, such as compute, memory, or network, and study the direct impact of these optimizations on the original workload execution,” Krishna said.
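To make the idea of a graph-based trace more concrete, the following Python sketch models a workload as nodes with dependencies and asks a simple what-if question about the network. It is an illustration only: the `TraceNode` class, the node names, and the durations are all made up for this example and do not reflect the actual Chakra schema or any real measurement.

```python
from dataclasses import dataclass, field


@dataclass
class TraceNode:
    name: str
    kind: str            # "compute" or "comm" (hypothetical categories)
    duration_us: float
    deps: list = field(default_factory=list)  # nodes that must finish first


def finish_times(nodes, comm_scale=1.0):
    """Earliest finish time of each node, assuming unlimited parallelism.

    nodes must be ordered so every dependency appears before its user.
    comm_scale rescales only communication durations (0.5 = twice as fast).
    """
    finish = {}
    for node in nodes:
        scale = comm_scale if node.kind == "comm" else 1.0
        start = max((finish[dep] for dep in node.deps), default=0.0)
        finish[node.name] = start + node.duration_us * scale
    return finish


def makespan(nodes, comm_scale=1.0):
    """Total time until the last node in the trace finishes."""
    return max(finish_times(nodes, comm_scale).values())


# A made-up training step: two compute phases, one collective, one update.
TRACE = [
    TraceNode("forward", "compute", 400.0),
    TraceNode("backward", "compute", 800.0, deps=["forward"]),
    TraceNode("all_reduce", "comm", 600.0, deps=["backward"]),
    TraceNode("data_prefetch", "compute", 300.0, deps=["forward"]),
    TraceNode("optimizer_step", "compute", 200.0, deps=["all_reduce"]),
]

if __name__ == "__main__":
    print("baseline makespan (us):", makespan(TRACE))
    print("with 2x faster network (us):", makespan(TRACE, comm_scale=0.5))
```

Because the trace records only operations and their dependencies, the network can be changed in isolation and the effect on the whole step re-estimated, without rerunning or even having access to the original software stack.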

The working group includes participants from major technology companies such as Meta, AMD, Intel, Nvidia, HP, IBM, and Micron, who help collect data for the project.

Autonomous Machines

Autonomous machines are designed to run all aspects of operation by themselves. As they continue to enter the mainstream, it is critical to ensure their efficiency and reliability, which will be driven by higher performance and safety requirements.

“Almost every software, hardware, or systems segment used in autonomous machines has to meet functional safety and performance standards,” ECE Ph.D. candidate Zishen Wan said.

Wan, who is part of ECE Steve W. Chaddick Chair Arijit Raychowdhury's Integrated Circuits & Systems Research Lab, is benchmarking ML and autonomy algorithms in Autonomous Machine Computing in hopes of ensuring these standards are met before autonomous machines are deployed in environments with real-world consequences.

Wan’s research explores cross-layer computing systems, architecture, and hardware for autonomous machines, looking for faults that could result in negative outputs.

“If there is a fault in the software or hardware, then it may propagate across the stack and result in wrong output action,” he said.

Wan and his team members have a virtual game-like simulator that tests the algorithms on a variety of compatible machines, such as drones and vehicles, and platforms in real-world settings. The goal is to benchmark the robotics computing system performance and help patch the algorithms, so the autonomous machines are able to self-correct when presented with faults.

The algorithms, which are provided by companies and practitioners, are evaluated on how efficiently the platform runs them and how reliably they run when facing faults.
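One common way to study how a fault turns into a wrong action is to inject single-bit errors into a value and watch whether the final decision changes. The Python sketch below shows the idea on a toy example. The `choose_action` controller and its 5-meter threshold are invented for illustration, and the article does not say which fault models Wan's team uses, so treat this as an assumption rather than a description of their simulator.

```python
import struct


def flip_bit(value, bit):
    """Return value with one bit of its IEEE 754 float32 encoding flipped."""
    (encoded,) = struct.unpack("<I", struct.pack("<f", value))
    (faulty,) = struct.unpack("<f", struct.pack("<I", encoded ^ (1 << bit)))
    return faulty


def choose_action(distance_m):
    """Toy controller (hypothetical): brake if an obstacle is within 5 m."""
    return "brake" if distance_m < 5.0 else "cruise"


def count_wrong_actions(distance_m):
    """Flip each of the 32 bits in turn and count how many change the action."""
    expected = choose_action(distance_m)
    return sum(
        choose_action(flip_bit(distance_m, bit)) != expected
        for bit in range(32)
    )


if __name__ == "__main__":
    reading = 4.0  # fault-free sensor reading in meters
    print("fault-free action:", choose_action(reading))
    print("bit flips that change the action:", count_wrong_actions(reading))
```

Some flips barely change the reading and are harmless, while a flip in a high mantissa bit or an exponent bit can turn a nearby obstacle into a distant one. Counting which faults reach the output is one way to decide where protection is worth its cost.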

“We give the algorithm creators a toolbox,” Wan said. “If parts of the algorithm are inefficient or vulnerable to faults, we give them modifications or methodologies they can use to reinforce their product. We want them to be able to adaptively protect their systems and run efficiently.”

Hardware Design

As technology becomes more powerful, creating the hardware necessary to run it becomes increasingly time-consuming and expensive.

ML has become an important tool in helping cut costs and save time in producing advanced hardware, which at times can contain billions of transistors. With so many parts, design tools under the guidance of ML algorithms have become a common means of production.

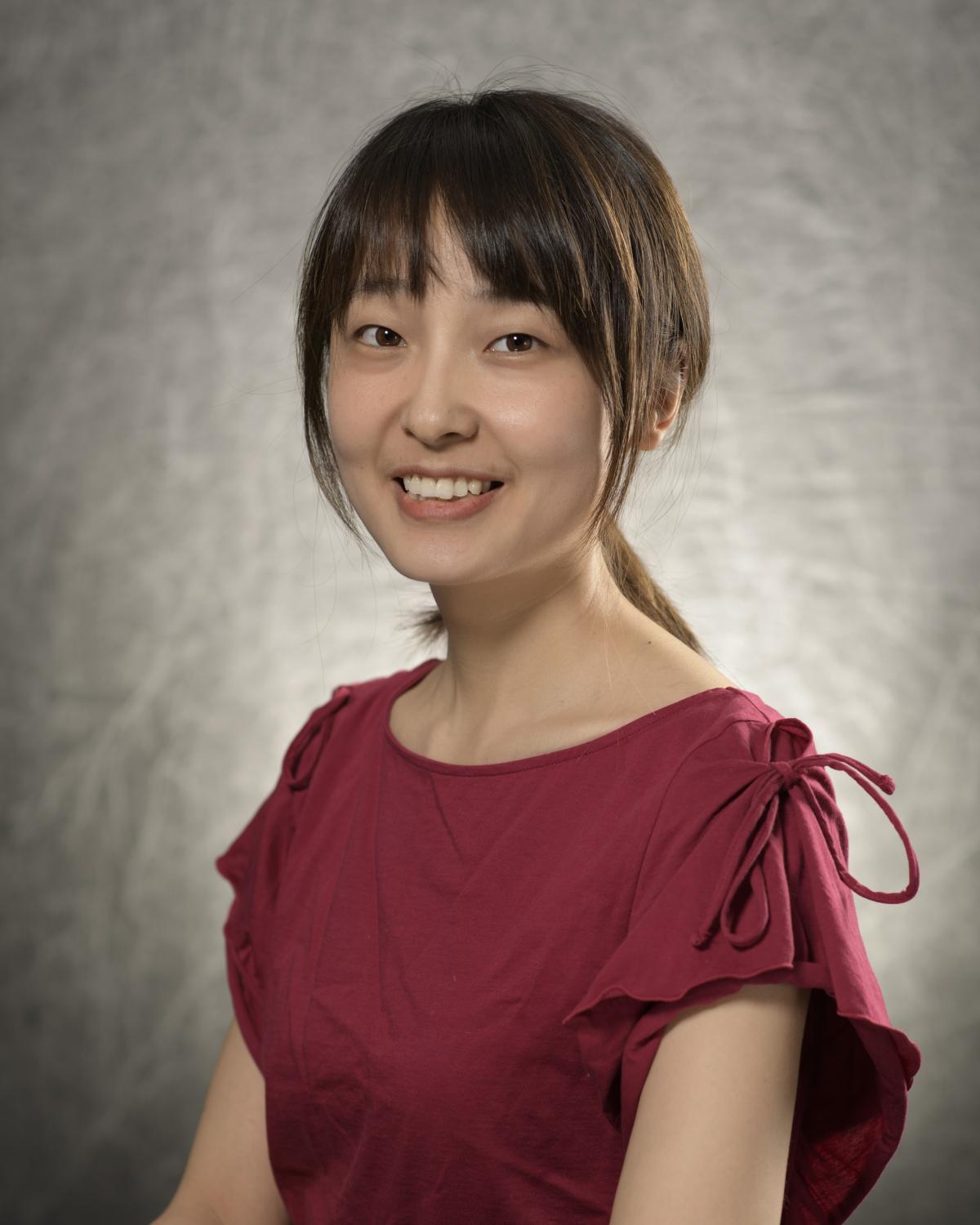

Assistant Professor Callie Hao and her team have a simulation that uses algorithms to create configurations of virtual hardware components. The simulation then evaluates each design on its speed and energy consumption.

“We don’t actually have to build the chip to know their effectiveness because it’s very expensive,” Hao said. “Just by looking at a simulation we know if it is good or bad.”
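The general pattern of generating candidate configurations and scoring each one in simulation can be sketched in a few lines of Python. Everything in this example is an assumption made for illustration: the `estimate` cost model, its constants, and the two design parameters are invented, and they stand in for the far more detailed simulation Hao's team uses.

```python
from itertools import product

SERIAL_OPS = 200_000    # work that cannot be parallelized (made-up number)
PARALLEL_OPS = 800_000  # work that is split evenly across compute units


def estimate(num_units, clock_mhz):
    """Toy cost model: returns (latency in ms, energy in microjoules)."""
    cycles = SERIAL_OPS + PARALLEL_OPS / num_units
    latency_ms = cycles / (clock_mhz * 1_000)
    power_mw = num_units * (clock_mhz / 100) ** 2
    return latency_ms, power_mw * latency_ms  # mW * ms = microjoules


def explore(unit_options, clock_options):
    """Score every combination of the two design parameters."""
    designs = []
    for units, clock in product(unit_options, clock_options):
        latency_ms, energy_uj = estimate(units, clock)
        designs.append({"units": units, "clock_mhz": clock,
                        "latency_ms": latency_ms, "energy_uj": energy_uj})
    return designs


def pareto_front(designs):
    """Keep designs that no other design beats on both latency and energy."""
    front = []
    for d in designs:
        dominated = any(
            o["latency_ms"] <= d["latency_ms"]
            and o["energy_uj"] <= d["energy_uj"]
            and (o["latency_ms"] < d["latency_ms"]
                 or o["energy_uj"] < d["energy_uj"])
            for o in designs
        )
        if not dominated:
            front.append(d)
    return front


if __name__ == "__main__":
    candidates = explore([1, 4, 16], [200, 400, 800])
    for design in pareto_front(candidates):
        print(design)
```

No single design is "best" here: faster configurations tend to cost more energy, so the useful output is the set of designs that are not beaten on both measures at once.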

Currently, benchmarking data is scarce. To enhance data collection, Hao’s research group created a platform allowing engineers to submit their algorithms as data.

“The more algorithms and hardware combinations we get, the richer data we’ll have to make definitive statements on the most effective designs,” Hao said.

With enough data they can expand the platform to create a publicly available infrastructure that will allow designers to not only benchmark their algorithms but also make them available for wider audiences to use and compare them without the need for domain-specific (hardware) knowledge.

“It will boost the practicality of ML algorithms to a broader application and also help hardware and tool designers use better ML algorithms to improve the quality and productivity of their own designs,” Hao said.
