A team of researchers, led by Professor Ghassan AlRegib in the School of Electrical and Computer Engineering, has been awarded the prestigious European Association for Signal Processing (EURASIP) 2023 Best Paper Award for their outstanding contribution to the journal Signal Processing: Image Communication.

The award-winning paper, titled "TS-LSTM and temporal-inception: Exploiting spatiotemporal dynamics for activity recognition," was published in 2019 by AlRegib and Assistant Professor Zsolt Kira in the College of Computing, together with then-Ph.D. candidates Chih-Yao (Kevin) Ma (now a research scientist at Facebook) and Min-Hung (Steve) Chen (now a research engineer at Nvidia). The work was completed in AlRegib's Omni Lab for Intelligent Visual Engineering and Science (OLIVES).

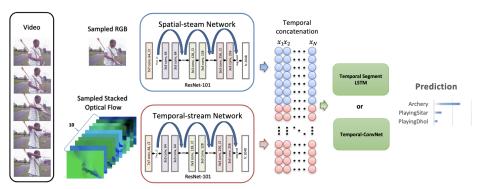

The research explores methods to improve action recognition in videos using deep neural networks, specifically two-stream Convolutional Neural Networks (ConvNets). It investigates how to better capture the spatiotemporal dynamics within video sequences. The study proposes and evaluates two new network architectures: a temporal segment RNN (TS-LSTM) and an Inception-style Temporal-ConvNet (Temporal-Inception). Both architectures are designed to integrate spatial and temporal information effectively and thereby improve the overall performance of action recognition systems. The research also identifies specific limitations of each method, which could guide future work in this area.

The EURASIP Best Paper Award honors exceptional papers published within the past four years in journals sponsored by EURASIP, recognizing the most influential ideas in the field.

The Georgia Tech team will be honored at the 2023 European Signal Processing Conference (EUSIPCO), held September 4-8 in Helsinki, Finland. EUSIPCO offers a comprehensive technical program covering the latest developments in signal processing research and technology, as well as their diverse applications.

EURASIP, founded in 1978, has been a crucial platform for researchers, providing an academic and professional forum to disseminate and discuss all aspects of signal processing, fostering growth and collaboration within the community.

Header image caption: Overview of the proposed framework. Spatial and temporal features are extracted from a two-stream ConvNet using ResNet-101 pre-trained on ImageNet and fine-tuned for single-frame activity prediction. The spatial and temporal features are then concatenated and temporally constructed into feature matrices, which serve as input to both proposed methods: Temporal Segment LSTM (TS-LSTM) and Temporal-Inception.
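To make the caption's data flow concrete, here is a minimal Python sketch of the feature-fusion step. It assumes each stream has already produced one feature vector per frame (plain lists stand in for the ResNet-101 outputs). The helpers `fuse_two_stream` and `segment_mean_pool` are hypothetical names, and the segment pooling is a simplified illustration of splitting a sequence into temporal segments, not the paper's exact TS-LSTM or Temporal-Inception formulation.

```python
from typing import List

# Illustrative sketch only: hypothetical helpers, not the authors' code.
Vector = List[float]
Matrix = List[Vector]  # rows = frames (time order), columns = feature dimensions


def fuse_two_stream(spatial: List[Vector], temporal: List[Vector]) -> Matrix:
    """Concatenate per-frame spatial and temporal feature vectors.

    Row t of the result is spatial[t] followed by temporal[t], giving a
    T x (D_s + D_t) feature matrix ordered by time.
    """
    if len(spatial) != len(temporal):
        raise ValueError("both streams must cover the same number of frames")
    return [s + t for s, t in zip(spatial, temporal)]


def segment_mean_pool(features: Matrix, num_segments: int) -> Matrix:
    """Split the T rows into contiguous segments and average each one.

    Simplified stand-in for temporal-segment pooling (an assumption for
    illustration); returns a num_segments x D matrix.
    """
    total = len(features)
    if not 1 <= num_segments <= total:
        raise ValueError("num_segments must be between 1 and the number of frames")
    pooled: Matrix = []
    for k in range(num_segments):
        # Integer boundaries guarantee every segment has at least one frame.
        start = k * total // num_segments
        end = (k + 1) * total // num_segments
        chunk = features[start:end]
        # Column-wise mean over the frames in this segment.
        pooled.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return pooled
```

For example, four frames with 2-D spatial features and 1-D temporal features fuse into a 4 x 3 matrix, and pooling it into two segments averages frames 0-1 and frames 2-3. In the actual framework, the constructed feature matrix would feed a learned network such as TS-LSTM or Temporal-Inception, which this sketch does not model.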